How to Design a Chat System at Scale

Requirements

Functional Requirements

- One-to-One Chat:

  - Real-time online message delivery.

  - Reliable offline message storage and retrieval.

- Group Chat:

  - Support for small to medium groups (< 200 members).

- Presence Status:

  - Real-time indicators for Online/Offline/Busy states.

Non-functional Requirements

- Scalability: Support 1 Billion registered users.

- Low Latency: End-to-end latency < 500ms (aiming for near real-time).

- High Consistency: Messages must be delivered in order (causal consistency) and exactly once, with no loss and no duplicates visible to the recipient.

- Fault Tolerance: The system must be resilient to server failures without data loss.

Communication Protocols (Message Model)

To achieve low latency and bi-directional communication, we compare the following mechanisms:

- Polling: Client periodically requests updates. Inefficient due to high overhead and latency.

- Long Polling: Client holds a request open until data is available. Better than polling, but every response still requires a fresh request, so the server keeps paying header and connection-handling overhead.

- WebSocket (Recommended): Persistent, full-duplex TCP connection. Ideal for real-time messaging.

- QUIC / HTTP/3: UDP-based transport with congestion control. Excellent for unstable networks (mobile clients) and reducing head-of-line blocking.

Decision: WebSocket is the industry standard for the connection layer due to its widespread support and efficiency for stateful connections.

Architecture & Scalability

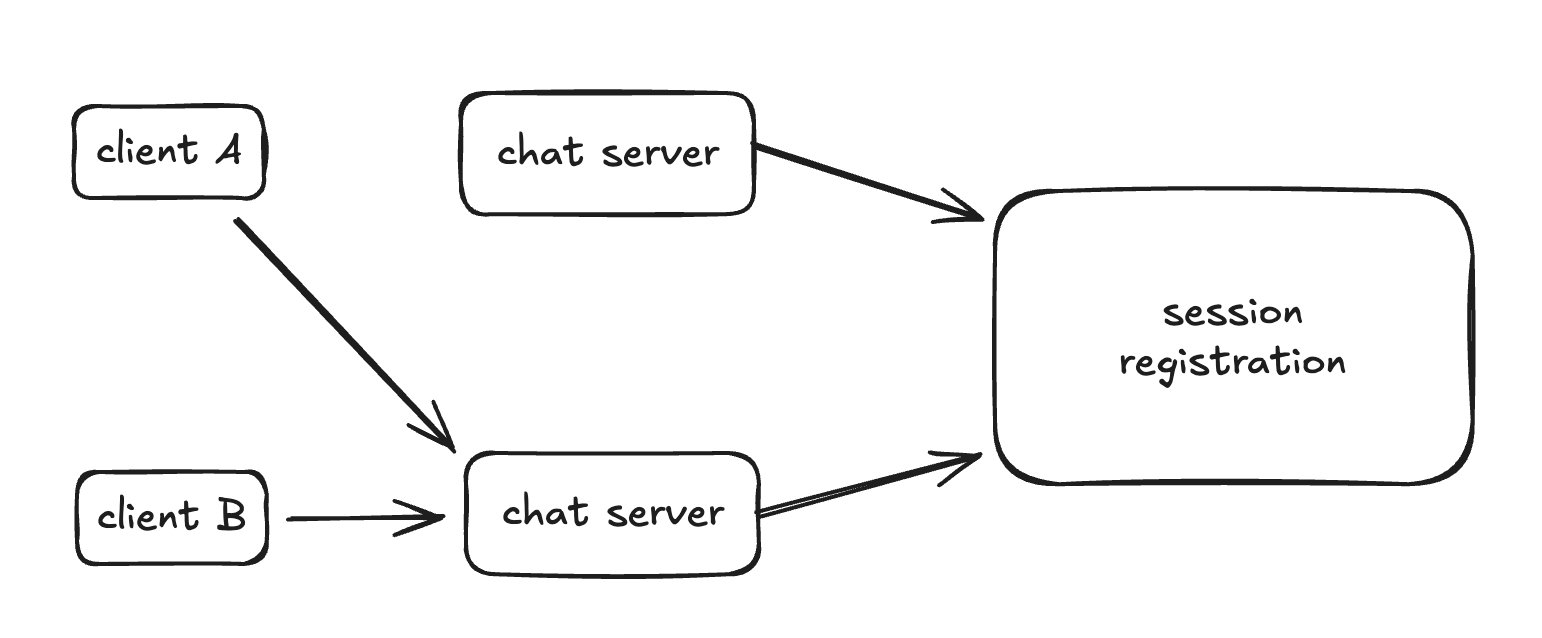

Connection Management (Session Affinity)

To ensure low latency, we need a stateful connection layer (Gateway/Chat Server). A user’s WebSocket connection must persist on a specific server.

Service Discovery & Session Storage:

We use a centralized Key-Value store (e.g., Redis) to map users to their physical servers.

- Data Structure: `<user_id, {last_heartbeat: timestamp, chat_server_id, ...}>`

- Workflow: When User A wants to send a message to User B, the router looks up User B’s `chat_server_id` in Redis to forward the payload.
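The lookup described above can be sketched as follows. This is a minimal illustration that uses an in-memory dictionary in place of Redis; the class name `SessionRegistry`, the 30-second heartbeat timeout, and the server id `chat-server-7` are assumptions made for the example, not part of the design.

```python
import time
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Session:
    chat_server_id: str
    last_heartbeat: float


class SessionRegistry:
    """In-memory stand-in for the user -> session mapping kept in Redis."""

    def __init__(self, ttl_seconds: float = 30.0) -> None:
        self._sessions: Dict[str, Session] = {}
        self._ttl = ttl_seconds  # assumed heartbeat timeout

    def heartbeat(self, user_id: str, chat_server_id: str,
                  now: Optional[float] = None) -> None:
        """Record which chat server currently holds the user's connection."""
        ts = time.time() if now is None else now
        self._sessions[user_id] = Session(chat_server_id, ts)

    def lookup(self, user_id: str, now: Optional[float] = None) -> Optional[str]:
        """Return the user's chat server, or None if unknown or stale."""
        ts = time.time() if now is None else now
        session = self._sessions.get(user_id)
        if session is None or ts - session.last_heartbeat > self._ttl:
            return None
        return session.chat_server_id


registry = SessionRegistry(ttl_seconds=30.0)
registry.heartbeat("user_b", "chat-server-7", now=1000.0)
target_online = registry.lookup("user_b", now=1010.0)  # heartbeat still fresh
target_stale = registry.lookup("user_b", now=1100.0)   # heartbeat expired
```

When the lookup returns `None`, the router would treat User B as offline and hand the message to the offline storage path instead of forwarding it.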

Capacity Planning (Memory for Sessions):

- Total Users: 1B

- Daily Active Users (DAU): 1B * 20% = 200M

- Peak Concurrent Users: 200M * 25% = 50M

- Memory Usage per User Session: approx 100 bytes (fd, ip_addr, port, state, user_id…)

- Total Memory Required: 50M * 100 bytes = 5GB

Note: 5GB fits easily into the RAM of a modern Redis cluster.
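The estimate above can be reproduced with a few lines of integer arithmetic. The constants come from the list above; the variable names are illustrative, and 1GB is taken as 10^9 bytes.

```python
TOTAL_USERS = 1_000_000_000
DAU_PERCENT = 20               # share of registered users active per day
PEAK_CONCURRENT_PERCENT = 25   # share of DAU online at peak
BYTES_PER_SESSION = 100        # fd, ip_addr, port, state, user_id, ...

dau = TOTAL_USERS * DAU_PERCENT // 100
peak_concurrent = dau * PEAK_CONCURRENT_PERCENT // 100
session_bytes = peak_concurrent * BYTES_PER_SESSION
session_gb = session_bytes / 1e9

print(f"DAU: {dau:,}")
print(f"Peak concurrent users: {peak_concurrent:,}")
print(f"Session memory: {session_gb} GB")
```

Changing `BYTES_PER_SESSION` is a quick way to see how sensitive the total is to per-session overhead in the store.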

Message Synchronization & Throughput

To decouple message ingestion from delivery and storage, we introduce a Message Queue (e.g., Kafka) between the connection layer and the backend logic.

Throughput Calculation (Peak Time):

- Average message rate: 0.2 msg/sec/user

- Total Throughput: 50M users * 0.2 = 10M msg/s

- Message Size:

  - Header (IDs): 8 * 3 = 24 bytes

  - Content: 600-800 bytes

  - Total (Compressed): approx 0.5KB

- Bandwidth: 10M msg/s * 0.5KB = 5GB/s
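As a quick check of the peak figures above, the same arithmetic in code. The rate of 0.2 msg/sec/user is expressed as one message every 5 seconds to keep the math in integers, and 0.5KB is taken as 500 bytes; the conversion to Gbit/s is added here for comparison with NIC speeds.

```python
PEAK_CONCURRENT_USERS = 50_000_000
SECONDS_PER_MESSAGE = 5   # 0.2 msg/sec/user is one message every 5 seconds
MESSAGE_BYTES = 500       # approx 0.5KB compressed, with 1KB = 1000 bytes

messages_per_second = PEAK_CONCURRENT_USERS // SECONDS_PER_MESSAGE
bandwidth_bytes_per_second = messages_per_second * MESSAGE_BYTES
bandwidth_gbit_per_second = bandwidth_bytes_per_second * 8 / 1e9

print(f"Throughput: {messages_per_second:,} msg/s")
print(f"Bandwidth: {bandwidth_bytes_per_second / 1e9} GB/s "
      f"({bandwidth_gbit_per_second} Gbit/s)")
```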

Scaling Strategy:

- Sharding: Since 5GB/s is high for a single cluster, we must shard the Message Queue by `user_id` (or `group_id` for groups) to preserve per-key ordering while distributing load.

- Server Count:

  - Benchmark: WhatsApp (Erlang-based) achieved 2M concurrent connections per server.

  - Required Chat Servers: 50M / 2M = 25 servers (a lower bound, before headroom for failover).

  - Result: This is highly manageable with modern C++ asynchronous I/O (epoll/io_uring).
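One way to picture the sharding rule is a stable hash from the shard key to a partition number, so that every message with the same key lands on the same partition and keeps its order there. The sketch below is an assumption-laden illustration: the partition count of 64, the `user:`/`group:` key prefixes, and the helper names are all hypothetical. It uses SHA-256 because Python's built-in `hash()` for strings varies between processes.

```python
import hashlib
from typing import Optional

NUM_PARTITIONS = 64  # assumed partition count, not taken from the text


def shard_key(user_id: str, group_id: Optional[str] = None) -> str:
    """Use group_id for group messages, user_id otherwise."""
    if group_id is not None:
        return f"group:{group_id}"
    return f"user:{user_id}"


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a shard key to a partition with a process-independent hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def required_servers(peak_connections: int, connections_per_server: int) -> int:
    """Ceiling division: minimum servers needed to hold all connections."""
    return -(-peak_connections // connections_per_server)


direct_partition = partition_for(shard_key("user_42"))
group_partition = partition_for(shard_key("user_42", group_id="group_7"))
chat_servers = required_servers(50_000_000, 2_000_000)
```

A real message queue client usually provides its own key-based partitioner; the point here is only that the key, not the sender's connection, decides the partition.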

Global Distribution (Edge Aggregation)

To handle the 5GB/s global traffic and reduce latency for cross-region users, we use Edge Aggregation.

- Mechanism: Users connect to the nearest Edge Node. Edge Nodes aggregate messages and send batch requests to the Central Data Center (or cross-region sync).

- Edge Capacity:

  - Assume one region handles 2M users.

  - Traffic: 2M users * 0.2 msg/s * 0.5KB = 200MB/s (about 1.6Gbps).

  - Feasibility: This is well under the capacity of a 10Gbps NIC, so a single modern server can handle it comfortably.
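The aggregation step can be sketched as a small batcher that collects messages at the edge and forwards them upstream in groups. This is a simplified illustration: `EdgeBatcher`, the batch size of 3, and the `flush` callback are hypothetical, and a production edge node would also flush on a timer so that a quiet period does not delay messages.

```python
from typing import Callable, List


class EdgeBatcher:
    """Collects messages and forwards them upstream in batches."""

    def __init__(self, flush: Callable[[List[bytes]], None],
                 max_batch: int = 3) -> None:
        self._flush = flush          # sends one batch to the central data center
        self._max_batch = max_batch  # assumed size threshold
        self._pending: List[bytes] = []

    def submit(self, message: bytes) -> None:
        """Queue a message and flush once the batch is full."""
        self._pending.append(message)
        if len(self._pending) >= self._max_batch:
            self.flush_now()

    def flush_now(self) -> None:
        """Send whatever is pending, if anything."""
        if self._pending:
            batch, self._pending = self._pending, []
            self._flush(batch)


sent_batches: List[List[bytes]] = []
batcher = EdgeBatcher(sent_batches.append, max_batch=3)
for i in range(7):
    batcher.submit(f"msg-{i}".encode("utf-8"))
batcher.flush_now()  # drain the final partial batch
batch_sizes = [len(batch) for batch in sent_batches]
```

Larger batches reduce the number of cross-region requests but add queueing delay, which has to stay inside the 500ms latency budget.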

Group Chat Design

Group chats introduce a “Fan-out” problem (1 sender, N receivers).

Data Model:

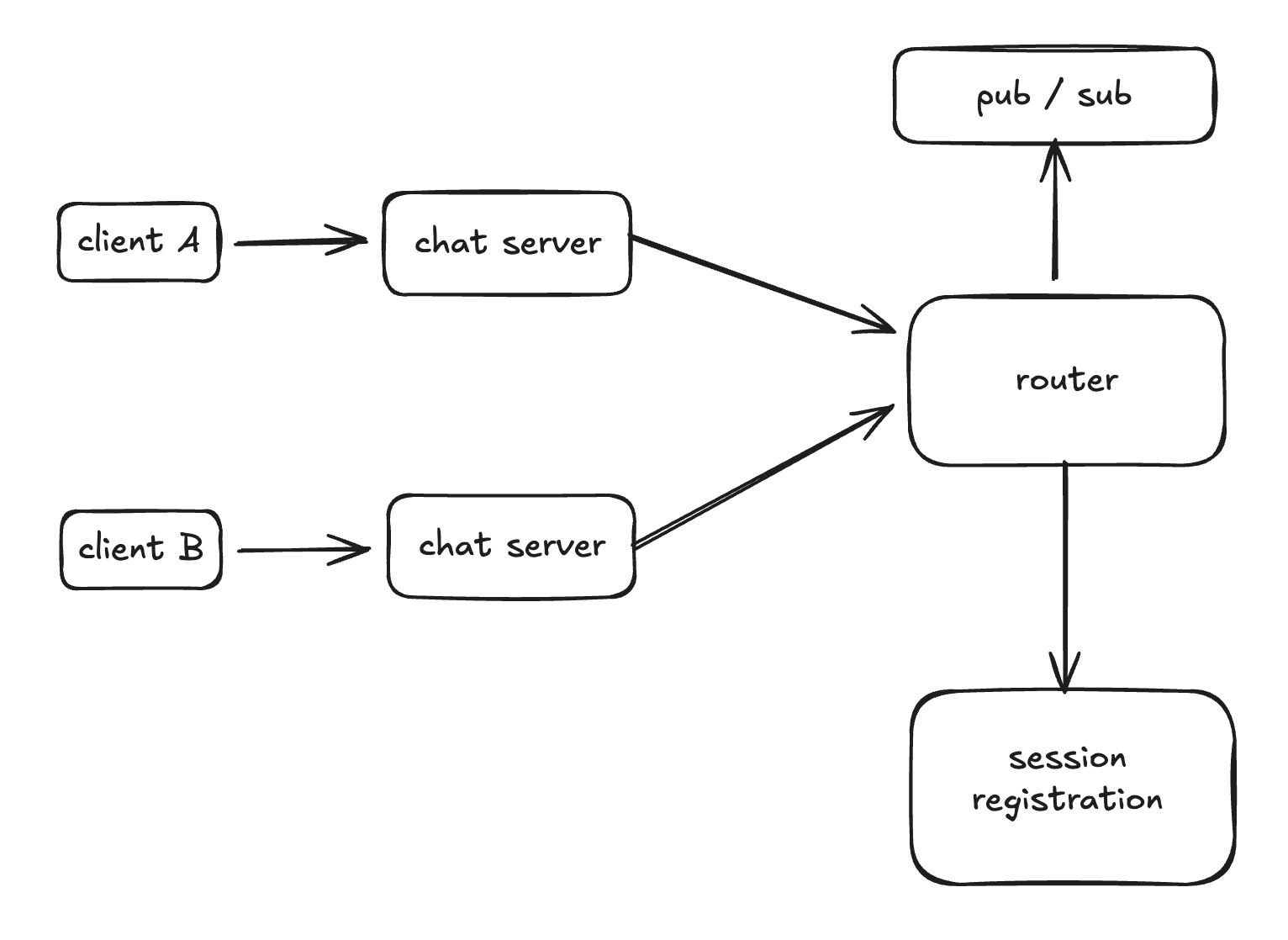

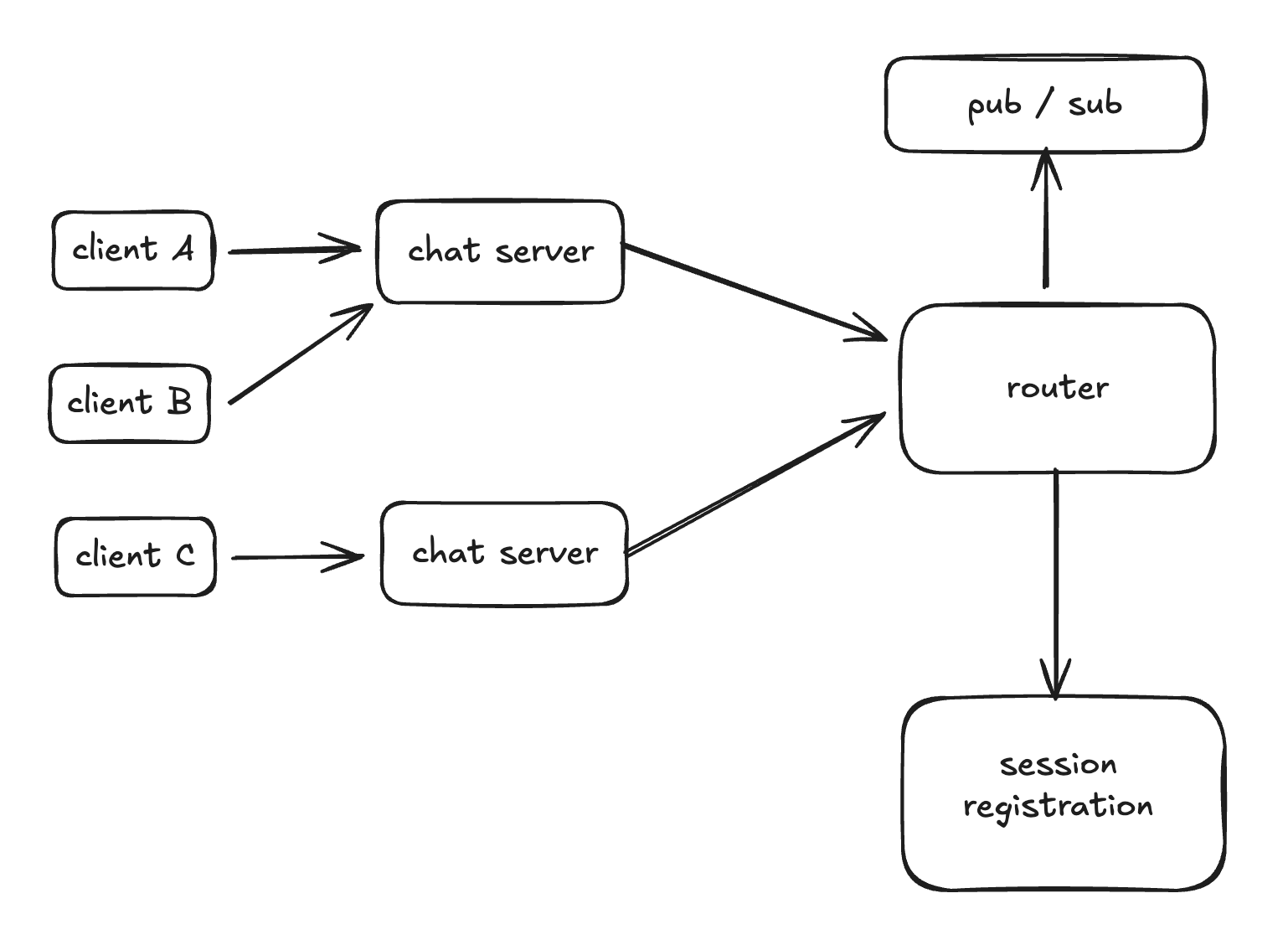

Routing Strategy:

Instead of broadcasting to all users immediately, we use a Group Register/Service.

- Group Registration: Records which Chat Servers host online members of a specific group.

- Delivery: The message is routed only to those specific Chat Servers.

- Optimization: For large groups, limit the fan-out by only pushing notifications to active members, while others pull on demand.
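A rough sketch of the Group Register idea: track, per group, which Chat Servers currently hold at least one online member, and route a group message only to those servers. The class and method names below are hypothetical, and an in-memory dictionary stands in for whatever shared store the register would actually use.

```python
from collections import defaultdict
from typing import Dict, Set


class GroupRegister:
    """Tracks which chat servers host online members of each group."""

    def __init__(self) -> None:
        # group_id -> chat_server_id -> online member ids on that server
        self._online: Dict[str, Dict[str, Set[str]]] = defaultdict(
            lambda: defaultdict(set)
        )

    def member_online(self, group_id: str, user_id: str,
                      chat_server_id: str) -> None:
        self._online[group_id][chat_server_id].add(user_id)

    def member_offline(self, group_id: str, user_id: str,
                       chat_server_id: str) -> None:
        servers = self._online.get(group_id)
        if servers is None:
            return
        members = servers.get(chat_server_id)
        if members is None:
            return
        members.discard(user_id)
        if not members:
            # No online members left on this server: stop routing to it.
            del servers[chat_server_id]

    def servers_for(self, group_id: str) -> Set[str]:
        """Chat servers that should receive a message for this group."""
        return set(self._online.get(group_id, {}))


register = GroupRegister()
register.member_online("g1", "alice", "s1")
register.member_online("g1", "bob", "s1")
register.member_online("g1", "carol", "s2")
servers_before = register.servers_for("g1")
register.member_offline("g1", "carol", "s2")
servers_after = register.servers_for("g1")
```

With this mapping, a message to a group costs one forward per Chat Server rather than one per member; each server then delivers locally to its own connections.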

Architectural Patterns:

- Push Model / Write Fanout

  - Suitable for small groups and real-time communication.

  - Mechanism: When a user sends a message to a group, the message is pushed to all online members of the group.

  - Pros: Fast reads. Each user only needs to read from their own inbox.

  - Cons: High write overhead. One message produces one write per member, so cost grows with group size.

- Pull Model / Read Fanout

  - Suitable for large groups and offline messages.

  - Mechanism: When a user sends a message to a group, the message is written once to the group register/service, and members pull messages from it on demand.

  - Pros: Fast writes. The sender only needs to write a single copy to the group register/service.

  - Cons: High read overhead. Every member fetches from the group register/service, so read load grows with group size.
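The trade-off between the two models can be made concrete with a toy comparison that counts writes per message. This is only a sketch: the class names are hypothetical, storage is in memory, and for simplicity every listed member is treated as online in the push case.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class PushFanout:
    """Write fanout: copy each message into every recipient's inbox."""

    def __init__(self, members: Dict[str, List[str]]) -> None:
        self.members = members
        self.inboxes: Dict[str, List[str]] = defaultdict(list)
        self.writes = 0

    def send(self, group_id: str, sender: str, text: str) -> None:
        for member in self.members[group_id]:
            if member != sender:
                self.inboxes[member].append(text)
                self.writes += 1  # one write per recipient

    def read(self, user_id: str) -> List[str]:
        return list(self.inboxes[user_id])


class PullFanout:
    """Read fanout: store one copy per group, readers fetch on demand."""

    def __init__(self) -> None:
        self.timelines: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
        self.cursors: Dict[Tuple[str, str], int] = defaultdict(int)
        self.writes = 0

    def send(self, group_id: str, sender: str, text: str) -> None:
        self.timelines[group_id].append((sender, text))
        self.writes += 1  # a single write regardless of group size

    def read(self, group_id: str, user_id: str) -> List[str]:
        timeline = self.timelines[group_id]
        start = self.cursors[(group_id, user_id)]
        self.cursors[(group_id, user_id)] = len(timeline)
        return [text for sender, text in timeline[start:] if sender != user_id]


members = {"g1": ["a", "b", "c", "d"]}
push = PushFanout(members)
pull = PullFanout()
push.send("g1", "a", "hi")
pull.send("g1", "a", "hi")
first_pull = pull.read("g1", "b")
second_pull = pull.read("g1", "b")  # cursor has advanced, nothing new
```

For a four-member group the push model performs three writes where the pull model performs one; the pull model pays instead on every `read`, which must consult the shared group timeline.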

Storage & Offline Buffering

We need a tiered storage strategy: fast write for recent messages and cost-effective storage for history.

Capacity Planning (Storage):

- Total Messages/Day: 200M DAU * 40 msg/user = 8B messages

- Offline Message Ratio: 15%

- Daily Storage: 8B * 15% * 0.5KB = 0.6TB / Day

- Monthly Storage: 0.6TB * 30 = 18TB

Technology Choice:

- Hot Data (Recent): NoSQL (Cassandra / DynamoDB / HBase). Optimized for write-heavy workloads and time-series data.

- Cold Data (History): Object Storage (S3) or Compressed Archives.

- Offline Buffer: Redis Streams or temporary KV storage to hold messages until the user comes online.
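To illustrate the offline buffer, here is a minimal in-memory sketch in which messages are held per user and removed only after the client acknowledges them, so a dropped connection during delivery does not lose data. The class `OfflineBuffer`, the per-user sequence numbers, and the acknowledgement step are assumptions for the example; they are not a description of the Redis Streams API.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Tuple


class OfflineBuffer:
    """Holds messages for offline users until delivery is acknowledged."""

    def __init__(self) -> None:
        # user_id -> queue of (sequence number, payload), oldest first
        self._queues: Dict[str, Deque[Tuple[int, str]]] = defaultdict(deque)

    def store(self, user_id: str, seq: int, payload: str) -> None:
        self._queues[user_id].append((seq, payload))

    def pending(self, user_id: str) -> List[Tuple[int, str]]:
        """Messages to replay when the user reconnects, in order."""
        return list(self._queues.get(user_id, ()))

    def ack(self, user_id: str, up_to_seq: int) -> None:
        """Drop messages the client has confirmed, up to and including seq."""
        queue = self._queues.get(user_id)
        if queue is None:
            return
        while queue and queue[0][0] <= up_to_seq:
            queue.popleft()
        if not queue:
            del self._queues[user_id]


buffer = OfflineBuffer()
buffer.store("bob", 1, "hi")
buffer.store("bob", 2, "there")
buffer.store("bob", 3, "?")
on_reconnect = buffer.pending("bob")
buffer.ack("bob", 2)
after_ack = buffer.pending("bob")
```

Because `pending` does not remove anything, a client that reconnects twice may see the same message twice; deduplicating by sequence number on the client keeps delivery effectively exactly once.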